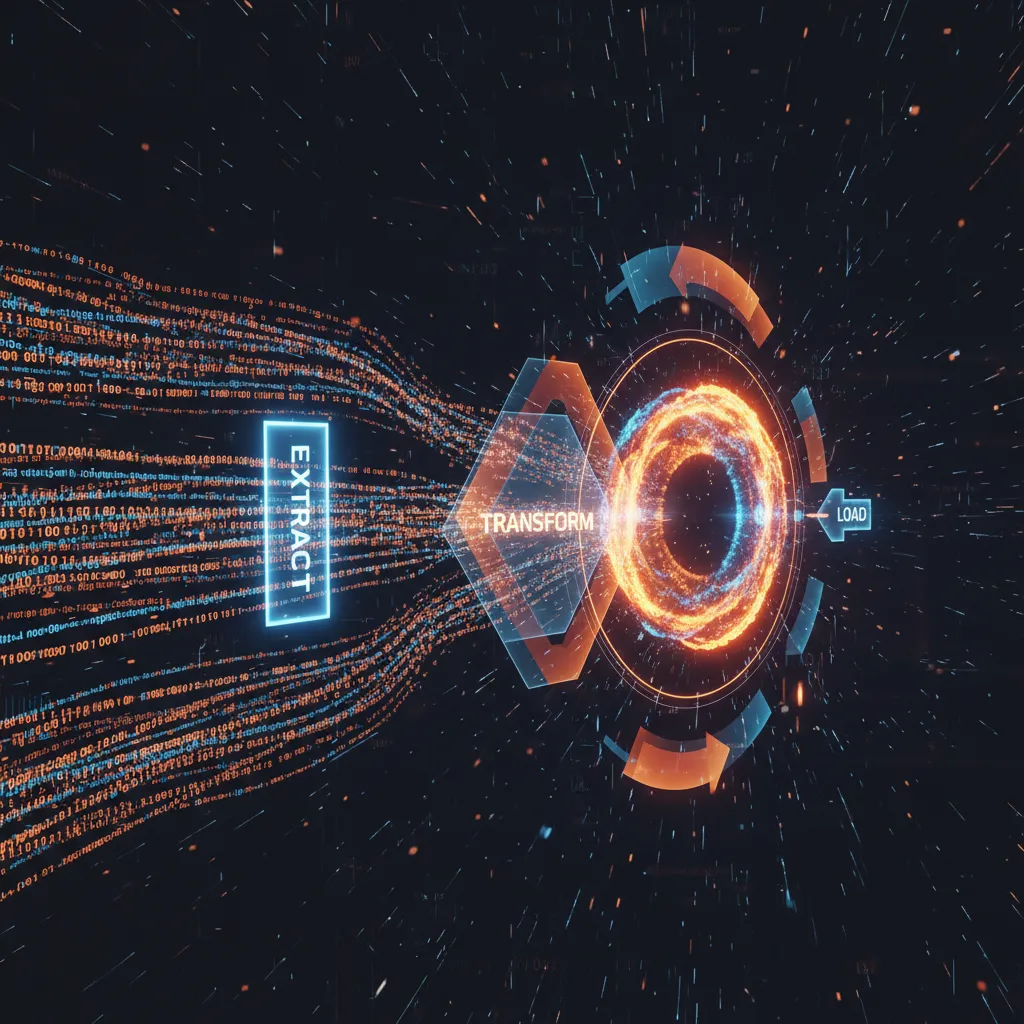

Part 5: Ingestion

Getting data into ClickHouse: INSERTs, Formats, and Integrations.

ClickHouse is hungry. It wants your data, and it wants it fast. In this part, we’ll cover the best ways to feed the beast.

The Golden Rule of Ingestion

Batch Your Inserts!

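Never insert row by row. Always insert in large batches (at least 1,000 rows, ideally 10,000 or more). Each INSERT creates a new data part on disk, and ClickHouse must later merge those parts in the background. A stream of tiny inserts therefore produces a flood of tiny parts. Merges fall behind, and eventually the server starts rejecting inserts with a "Too many parts" error.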
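On the client side, batching usually means buffering rows and sending them in one go once the buffer is full. Below is a minimal Python sketch of that idea. `InsertBuffer` and `flush_fn` are hypothetical names: `flush_fn` stands in for whatever call your client actually uses to send a single INSERT.

```python
from typing import Callable, List, Sequence

Row = Sequence[object]


class InsertBuffer:
    """Accumulates rows and hands them off in large batches.

    `flush_fn` is a hypothetical callback standing in for the client call
    that sends one INSERT containing the whole batch.
    """

    def __init__(self, flush_fn: Callable[[List[Row]], None], batch_size: int = 10_000):
        if batch_size <= 0:
            raise ValueError("batch_size must be positive")
        self.flush_fn = flush_fn
        self.batch_size = batch_size
        self._rows: List[Row] = []

    def add(self, row: Row) -> None:
        self._rows.append(row)
        # One INSERT per full batch instead of one INSERT per row.
        if len(self._rows) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Send whatever is buffered (e.g. on shutdown), then start a fresh list.
        if self._rows:
            self.flush_fn(self._rows)
            self._rows = []


sent_batches = []
buf = InsertBuffer(sent_batches.append, batch_size=10_000)
for i in range(25_000):
    buf.add(("2023-01-01 12:00:00", i, "/home"))
buf.flush()  # push out the final partial batch
print([len(b) for b in sent_batches])  # [10000, 10000, 5000]
```

Here 25,000 rows become three INSERTs instead of 25,000. A real buffer would also flush on a timer, so that a slow trickle of rows does not sit unsent indefinitely.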

Formats

ClickHouse supports dozens of formats. The most common ones are:

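- RowBinary: A compact row-oriented binary format. Very fast to parse; use it if your client can produce it.
- Native: ClickHouse’s internal columnar format. Extremely fast, and generally the most efficient option.
- JSONEachRow: One JSON object per line. Great for flexibility and debugging.
- CSV/TSV: Good for legacy data.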
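To make the difference between a binary format and a text format concrete, here is a minimal Python sketch that encodes the same two rows as RowBinary and as JSONEachRow. The schema `(timestamp DateTime, user_id UInt64, url String)` is assumed for illustration. The encoding rules follow RowBinary: fixed-width little-endian integers, DateTime as a UInt32 Unix timestamp, and String as a LEB128 varint length followed by the raw bytes.

```python
import json
import struct
from datetime import datetime, timezone


def write_varint(n: int) -> bytes:
    """Unsigned LEB128 varint, used by RowBinary for String lengths."""
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_row_binary(ts: datetime, user_id: int, url: str) -> bytes:
    # Assumed schema: (timestamp DateTime, user_id UInt64, url String)
    url_bytes = url.encode("utf-8")
    return (
        struct.pack("<I", int(ts.timestamp()))      # DateTime: UInt32 Unix seconds, little-endian
        + struct.pack("<Q", user_id)                # UInt64, little-endian
        + write_varint(len(url_bytes)) + url_bytes  # String: varint length, then raw bytes
    )


def encode_json_each_row(ts: datetime, user_id: int, url: str) -> str:
    # One self-describing JSON object per line.
    return json.dumps({
        "timestamp": ts.strftime("%Y-%m-%d %H:%M:%S"),
        "user_id": user_id,
        "url": url,
    }) + "\n"


rows = [
    (datetime(2023, 1, 1, 12, 0, 0, tzinfo=timezone.utc), 1, "/home"),
    (datetime(2023, 1, 1, 12, 0, 1, tzinfo=timezone.utc), 2, "/about"),
]

# RowBinary rows are simply concatenated: no header, no delimiters.
row_binary = b"".join(encode_row_binary(*r) for r in rows)
json_each_row = "".join(encode_json_each_row(*r) for r in rows)

print(len(row_binary))  # 37
print(len(json_each_row.encode("utf-8")), "bytes as JSONEachRow")
```

The binary payload carries no column names and no separators, which is why it is cheap to parse. It is also why the client and the table must agree exactly on column order and types. JSONEachRow, by contrast, is larger but readable and self-describing.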

Inserting Data

```sql
-- Standard INSERT
INSERT INTO hits (timestamp, user_id, url) VALUES
    ('2023-01-01 12:00:00', 1, '/home'),
    ('2023-01-01 12:00:01', 2, '/about');
```
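Applications often send the same kind of batch over ClickHouse’s HTTP interface rather than as literal `VALUES`. The minimal Python sketch below builds one POST request that carries a whole batch as JSONEachRow, but does not send it. The server URL (`http://localhost:8123`, the default HTTP port, with no authentication) and the helper name `build_insert_request` are assumptions made for illustration.

```python
import json
import urllib.parse
import urllib.request


def build_insert_request(rows, host="http://localhost:8123", table="hits"):
    """Build (but don't send) one HTTP INSERT carrying a whole batch.

    Assumes an unauthenticated server on the default HTTP port; the query
    goes in the `query` URL parameter and the rows go in the request body.
    """
    query = f"INSERT INTO {table} FORMAT JSONEachRow"
    body = "".join(json.dumps(row) + "\n" for row in rows).encode("utf-8")
    # quote_via=quote encodes spaces as %20 rather than '+'.
    params = urllib.parse.urlencode({"query": query}, quote_via=urllib.parse.quote)
    return urllib.request.Request(f"{host}/?{params}", data=body, method="POST")


rows = [
    {"timestamp": "2023-01-01 12:00:00", "user_id": 1, "url": "/home"},
    {"timestamp": "2023-01-01 12:00:01", "user_id": 2, "url": "/about"},
]
req = build_insert_request(rows)
print(req.full_url)
# To actually send it to a running server: urllib.request.urlopen(req)
```

Because the format is named in the query and the data travels in the body, swapping JSONEachRow for CSV or RowBinary only changes the `FORMAT` clause and the body encoding.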

```bash
# Inserting from a file (CLI)
clickhouse-client --query "INSERT INTO hits FORMAT CSV" < data.csv
```
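If the rows come from your own code, Python’s standard `csv` module can produce a file in the shape `FORMAT CSV` expects. Here is a minimal sketch, assuming the three-column `hits` layout used in the examples; the row data and the helper name `to_clickhouse_csv` are illustrative. There is no header row, because plain `CSV` treats every line as data (`CSVWithNames` is the variant that reads a header).

```python
import csv
import io

rows = [
    ("2023-01-01 12:00:00", 1, "/home"),
    ("2023-01-01 12:00:01", 2, "/about, contact"),  # the comma forces quoting
]


def to_clickhouse_csv(rows) -> str:
    """Render rows as header-less CSV, quoting fields only when needed."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerows(rows)
    return buf.getvalue()


text = to_clickhouse_csv(rows)
print(text, end="")
# 2023-01-01 12:00:00,1,/home
# 2023-01-01 12:00:01,2,"/about, contact"

# Write the file that the clickhouse-client command reads from stdin.
with open("data.csv", "w", newline="", encoding="utf-8") as f:
    f.write(text)
```

Letting the `csv` module handle quoting avoids the classic hand-rolled CSV bug, where a comma or quote inside a value silently shifts columns.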

Integrations

You can ingest data from almost anywhere:

- Kafka: Use the `Kafka` table engine for direct streaming.
- S3/GCS: Read directly from object storage using the `s3` table function.
- PostgreSQL/MySQL: Replicate data using the `MaterializedPostgreSQL` or `MaterializedMySQL` database engines.

Conclusion

Getting data in is half the battle. Now that we have data, let’s learn how to query it efficiently.